I’m a developer who’s spent decades working with game engines and AI systems, and watching NPCs stand motionless in elaborate, carefully crafted virtual spaces always felt like a waste to me. These worlds had 3D environments, physics, avatars, and ambiance: everything needed for immersion except inhabitants that felt alive.

The recent explosion of accessible large language models presented an opportunity I couldn’t ignore. What if we could teach NPCs to actually perceive their environment, understand what people were saying to them, and respond with something resembling intelligence?

That question led me down a path that resulted in a modular, open-source NPC framework. I built it primarily to find out whether this was even possible at scale in OpenSimulator. What I discovered surprised me, not just technically but in what it revealed about what we might be missing in our virtual worlds.

The fundamental problem

Let me describe what traditional NPC development looks like in OpenSimulator.

The platform provides built-in functions for basic NPC control: you can make them walk to coordinates, sit on objects, move their heads, and say things. But actual behavior requires extensive scripting.

Want an NPC to sit in an available chair? You need collision detection, object classification algorithms, occupancy checking, and furniture prioritization. Want them to avoid walking through walls? Better build pathfinding. Want them to respond to what someone says? Keyword matching and branching dialog trees.

Every behavior multiplies the complexity. Every new interaction requires new code. Most grid owners don’t have the technical depth to build sophisticated NPCs, so they settle for static decorations that occasionally speak.

There’s a deeper problem too: NPCs don’t know what they’re looking at. When someone asks an NPC “What’s near you?”, a traditional NPC might respond with a canned line, but it has no actual sensor data about its surroundings. It’s describing a fantasy, not reality.

Building spatial awareness

The first breakthrough in my framework was solving the environmental awareness problem.

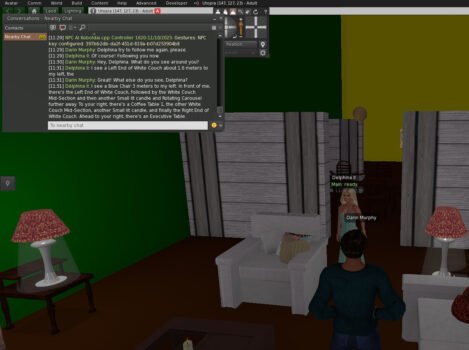

I built a Senses module that continuously scans the NPC’s surroundings. It detects nearby avatars, objects, and furniture, measures distances, tracks positions, and checks whether furniture is occupied. This sensory data is formatted into a structured context and injected into every AI conversation.
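Turning raw positions into labels like “to my left” or “front-right” comes down to the angle between the NPC’s facing and the direction to the object. Here is a minimal Python sketch of that idea, assuming a flat 2D comparison, a yaw measured counterclockwise from the +X axis, and hypothetical 45° sectors. The framework itself is written in LSL, and its actual sector boundaries and label wording may differ.

```python
import math

# Hypothetical 45-degree sectors (upper bound of |relative angle|, label template),
# loosely modeled on the direction labels in the AROUND-ME sample.
SECTORS = [
    (22.5, "in front of me"),
    (67.5, "front-{side}"),
    (112.5, "to my {side}"),
    (157.5, "behind me to my {side}"),
    (180.0, "behind me"),
]

def relative_bearing(npc_pos, npc_yaw, target_pos):
    """Return (distance_m, label) for a target relative to the NPC.

    npc_yaw is in radians, counterclockwise from +X, so a positive
    relative angle means the target is on the NPC's left.
    """
    dx = target_pos[0] - npc_pos[0]
    dy = target_pos[1] - npc_pos[1]
    distance = math.hypot(dx, dy)
    # Angle of the target relative to the NPC's facing, wrapped to [-180, 180).
    rel = math.degrees(math.atan2(dy, dx) - npc_yaw)
    rel = (rel + 180.0) % 360.0 - 180.0
    side = "left" if rel > 0 else "right"
    for limit, label in SECTORS:
        if abs(rel) <= limit:
            return distance, label.format(side=side)
    return distance, "behind me"
```

A caller would then combine the label and distance into text such as `f"{label}, {distance:.1f}m"` when building the context.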

Here’s what that looks like in practice. When someone talks to the NPC, the Chat module prepares the conversation context like this:

AROUND-ME:1,dc5904e0-de29-4dd4-b126-e969d85d1f82,owner:Darin Murphy,2.129770m,in front of me,level; following,avatars=1,OBJECTS=Left End of White Couch (The left end of a elegant White Couch adorn with a soft red pillow with goldn swirls printed on it.) [scripted, to my left, 1.6m, size:1.4×1.3×1.3m], White Couch Mid-section (The middle section of a elegant white couch.) [scripted, in front of me to my left, 1.8m, size:1.0×1.3×1.0m], Small lit candle (A small flame adornes this little fat candle) [scripted, front-right, 2.0m, size:0.1×0.2×0.1m], Rotating Carousel (Beautiful little hand carved horse of various colored saddles and manes ride endlessly around in this beautiful carouel) [scripted, front-right, 2.4m, size:0.3×0.3×0.3m], Coffee Table 1 ((No Description)) [furniture, front-right, 2.5m, size:2.3×0.6×1.2m], White Couch Mid-section (The middle section of a elegant white couch.) [scripted, in front of me to my left, 2.6m, size:1.0×1.3×1.0m], Small lit candle (A small flame adornes this little fat candle) [scripted, front-right, 2.9m, size:0.1×0.2×0.1m], Right End of White Couch (The right end of a elegant white couch adored with fluffy soft pillows) [scripted, in front of me, 3.4m, size:1.4×1.2×1.6m], Executive Table Lamp (touch) (Beautiful Silver base adorn with a medium size red this Table Lamp is dark yellow lamp shade.) [scripted, to my right, 4.1m, size:0.6×1.0×0.6m], Executive End Table (Small dark wood end table) [furniture, to my right, 4.1m, size:0.8×0.8×0.9m]\nUser

This information travels with every message to the AI model. When the NPC responds, it can say things like “I see you standing by the blue chair” or “Sarah’s been nearby.” The responses stay grounded in reality.

This addresses a critical problem I’ve seen with AI-driven NPCs: hallucination. Language models will happily describe mountains that don’t exist, furniture that isn’t there, or entire landscapes they’ve invented. Explicitly telling the AI what is actually present in the environment keeps its responses rooted in what visitors can see.
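The OBJECTS portion of the AROUND-ME sample follows a regular pattern. Each entry gives the object's name and its description in parentheses, then its kind, direction, distance, and size in brackets, with the nearest objects first. The Python sketch below reproduces that layout from objects that have already been sensed. It assumes the direction label was computed elsewhere and uses a hypothetical ten-item cap, chosen only because the sample happens to list ten objects.

```python
from dataclasses import dataclass

@dataclass
class SensedObject:
    name: str
    description: str
    kind: str        # e.g. "scripted" or "furniture", as in the sample
    direction: str   # precomputed relative label such as "to my left"
    distance: float  # metres from the NPC
    size: tuple      # (x, y, z) bounding-box size in metres

def format_objects(objects, max_items=10):
    """Render sensed objects in the style of the OBJECTS= part of AROUND-ME."""
    parts = []
    # The sample lists objects nearest-first, so sort by distance before capping.
    for obj in sorted(objects, key=lambda o: o.distance)[:max_items]:
        size = "×".join(f"{v:.1f}" for v in obj.size)
        parts.append(
            f"{obj.name} ({obj.description}) "
            f"[{obj.kind}, {obj.direction}, {obj.distance:.1f}m, size:{size}m]"
        )
    return "OBJECTS=" + ", ".join(parts)
```

Capping and sorting matter because the whole string travels with every request, so the nearest, most relevant objects should win the limited context space.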

The architecture: six scripts, one system

Rather than building a monolithic script, I designed the framework as modular components.

Main.lsl creates the NPC and orchestrates communication between modules. It’s the nervous system connecting all the parts.

Chat.lsl handles AI integration. This is where the magic happens—it combines user messages with sensory data, sends everything to an AI model (local or cloud), and interprets responses. The framework supports KoboldAI for local deployments, plus OpenAI, OpenRouter, Anthropic, and HuggingFace for cloud-based options. Switching between providers requires only changing a configuration file.

Senses.lsl provides that environmental awareness I mentioned—continuously scanning and reporting on what’s nearby.

Actions.lsl manages movement: following avatars, sitting on furniture, and navigating. It includes velocity prediction so NPCs don’t constantly chase behind moving targets. It also includes universal seating awareness to prevent awkward moments where two NPCs try to sit in the same chair.
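Velocity prediction can be as simple as aiming at where the target will be rather than where it is now. Here is a minimal sketch that uses linear extrapolation, with a lookahead equal to the time the NPC needs to close the gap (capped at two seconds) and a hypothetical 1.5 m follow distance. The target's velocity is assumed to be available from sensor data. The post doesn't say how Actions.lsl obtains it or what constants it uses.

```python
import math

def predict_position(position, velocity, lookahead_s):
    """Linear extrapolation of where a target will be lookahead_s seconds from now."""
    return tuple(p + v * lookahead_s for p, v in zip(position, velocity))

def follow_goal(npc_pos, target_pos, target_vel, speed,
                follow_distance=1.5, max_lookahead=2.0):
    """Choose a movement goal that leads a moving target instead of trailing it."""
    gap = math.dist(npc_pos, target_pos)
    # Roughly how long the NPC needs to close the gap, capped to keep predictions short-range.
    lookahead = min(gap / speed, max_lookahead) if speed > 0 else 0.0
    predicted = predict_position(target_pos, target_vel, lookahead)
    d = math.dist(npc_pos, predicted)
    if d <= follow_distance:
        return tuple(npc_pos)  # already close enough; hold position
    # Stop follow_distance short of the predicted point, on the line from the NPC.
    t = (d - follow_distance) / d
    return tuple(n + (p - n) * t for n, p in zip(npc_pos, predicted))
```

For a target 4 m ahead moving at 1 m/s and an NPC speed of 2 m/s, the goal becomes 4.5 m ahead rather than 2.5 m. That is the difference between leading and trailing.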

Pathfinding.lsl implements A* navigation with real-time obstacle avoidance. Instead of pre-baked navigation meshes, the NPC maps its environment dynamically. It distinguishes walls from furniture through keyword analysis and dimensional measurements. It detects doorways by casting rays in multiple directions. It even tries to find alternate routes around obstacles.

Gestures.lsl triggers animations based on AI output. When the AI model outputs markers like %smile% or %wave%, this module plays the corresponding animations at appropriate times.

All six scripts run on a coordinated timer system with staggered cycles, which prevents timer collisions and distributes computational load. Each module has a clearly defined role, and all of them speak a common language through link messages.
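Staggering means giving the modules the same cadence but different phase offsets, so no two scripts wake at the same instant. In LSL, each script would drive its own cycle with `llSetTimerEvent`, perhaps after an initial `llSleep` or a shorter first interval. The Python below only models the scheduling arithmetic. The evenly spaced offsets, the shared 3-second period, and the module order are assumptions for illustration, not the framework's actual settings.

```python
def staggered_schedule(modules, period, horizon):
    """Give each module the same timer period but a different phase offset.

    Returns {module: [fire times in seconds]} up to (but excluding) horizon.
    """
    step = period / len(modules)  # spread start times evenly across one period
    schedule = {}
    for i, name in enumerate(modules):
        offset = i * step
        times = []
        k = 0
        # Compute each firing directly from the offset to avoid float drift.
        while offset + k * period < horizon:
            times.append(round(offset + k * period, 3))
            k += 1
        schedule[name] = times
    return schedule

def min_gap(schedule):
    """Smallest interval between any two timer firings across all modules."""
    times = sorted(t for ts in schedule.values() for t in ts)
    return min(b - a for a, b in zip(times, times[1:]))
```

With six modules on a 3-second period, each script fires half a second after its neighbour. The minimum gap between any two firings therefore never drops to zero.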

Intelligent movement that actually works

Getting NPCs to navigate naturally proved more complex than I expected.

The naive approach—just call llMoveToTarget() and point at the destination—results in NPCs getting stuck, walking through walls, or oscillating helplessly when blocked. Real navigation requires actual pathfinding.

The Pathfinding module implements A* search, which is standard in game development but relatively rare in OpenSim scripts. It’s computationally expensive, so I’ve had to optimize carefully for LSL’s constraints.

What makes it work is dynamic obstacle detection. Instead of pre-calculated navigation meshes, the Senses module continuously feeds the Pathfinding module with current object positions. If someone moves furniture, paths automatically recalculate. If a door opens or closes, the system adapts.
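A grid-based A* search captures the idea. The Senses data becomes a set of blocked cells, and the search reruns whenever that set changes. This Python sketch assumes a 4-connected grid with unit step costs and a Manhattan-distance heuristic. The framework's actual grid resolution, neighbour set, and cost model aren't described here, and an LSL version would need much tighter memory handling.

```python
import heapq

def astar(start, goal, blocked, width, height):
    """A* over a 4-connected grid; blocked is a set of (x, y) cells.

    Returns the list of cells from start to goal, or None if unreachable.
    """
    def h(cell):
        # Manhattan distance: admissible and consistent on a 4-connected unit grid.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {}
    g = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > g[cell]:
            continue  # stale heap entry; a cheaper route was already found
        x, y = cell
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            in_bounds = 0 <= nxt[0] < width and 0 <= nxt[1] < height
            if not in_bounds or nxt in blocked:
                continue
            new_cost = cost + 1
            if new_cost < g.get(nxt, float("inf")):
                g[nxt] = new_cost
                came_from[nxt] = cell
                heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None
```

When someone moves a piece of furniture, only the `blocked` set changes. Calling `astar` again with the new set is what lets paths recalculate automatically.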

One specific challenge was wall versus furniture classification. The system needs to distinguish between “this is a wall I can’t pass through” and “this is a chair I might want to sit in.” I solved this through a multi-layered approach: keyword analysis (checking object names and descriptions), dimensional analysis (measuring aspect ratios), and type-based classification.

This matters because misclassification causes bizarre behavior. An NPC trying to walk through a cabinet or sit on a wall looks broken, not intelligent.
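The three layers can be sketched as an ordered series of checks: keywords first, then shape, then the object's coarse type tag. The keyword lists, the 2 m height and 0.3 m thickness thresholds, and the 5:1 aspect ratio below are hypothetical values chosen for illustration. Plain substring matching is also deliberately naive, so a real classifier would need word boundaries and exceptions.

```python
WALL_KEYWORDS = ("wall", "partition", "fence")
SEAT_KEYWORDS = ("chair", "couch", "sofa", "bench", "stool", "seat")
OTHER_FURNITURE = ("table", "desk", "cabinet", "shelf", "lamp", "bed")

def classify(name, description, size, object_type):
    """Classify a sensed object as 'wall', 'seat', 'furniture', or 'obstacle'.

    size is (x, y, z) in metres with z vertical; object_type is a coarse
    type tag such as 'furniture' or 'scripted'.
    """
    text = f"{name} {description}".lower()
    # Layer 1: keyword analysis of the name and description (plain substring match).
    if any(k in text for k in WALL_KEYWORDS):
        return "wall"
    if any(k in text for k in SEAT_KEYWORDS):
        return "seat"
    if any(k in text for k in OTHER_FURNITURE):
        return "furniture"
    # Layer 2: dimensional analysis. Walls are tall and thin along one horizontal axis.
    sx, sy, sz = size
    thin, long_side = min(sx, sy), max(sx, sy)
    if sz >= 2.0 and thin <= 0.3 and long_side >= 5 * thin:
        return "wall"
    # Layer 3: fall back on the coarse type tag.
    return "furniture" if object_type == "furniture" else "obstacle"
```

Checking walls before seats is a design choice. Wrongly treating a wall as a chair produces the "sit on a wall" failure, which looks worse than a missed seat.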

The pathfinding also detects portals—open doorways between rooms. By casting rays in 16 directions at multiple distances and measuring gap widths, the system finds openings and verifies they’re actually passable (an NPC needs more than 0.5 meters to fit through).
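One way to turn a ring of ray results into gap widths is to find runs of consecutive clear rays and convert each run's angular span into a chord length at the probe distance. This Python sketch assumes 16 evenly spaced rays with ray *i* at *i* × 22.5°, and it assumes each gap edge lies halfway between a clear ray and its blocked neighbour, so the widths are estimates. Repeating the call at several probe distances mirrors the "multiple distances" step. How Pathfinding.lsl actually measures widths isn't specified in the post.

```python
import math

def find_portals(clear, probe_distance, min_width=0.5):
    """Find passable gaps in a ring of rays cast at one probe distance.

    clear: list of booleans, one per evenly spaced direction (True = no hit).
    Returns [(center_angle_deg, estimated_width_m), ...] for gaps wider than min_width.
    """
    n = len(clear)
    step = 360.0 / n
    if all(clear) or not any(clear):
        return []  # open space or fully enclosed: no doorway-like gap at this distance
    # Start scanning just after a blocked ray so clear runs never wrap across the start.
    start = clear.index(False)
    portals = []
    run = 0
    for k in range(1, n + 1):
        i = (start + k) % n
        if clear[i]:
            run += 1
            continue
        if run:
            # Estimated opening: the run's angular span as a chord at probe_distance.
            span = math.radians(run * step)
            width = 2 * probe_distance * math.sin(span / 2)
            first = (i - run) % n
            center = (first + (run - 1) / 2) * step % 360.0
            if width > min_width:
                portals.append((center, width))
            run = 0
    return portals
```

The 0.5 m default reflects the minimum width the post gives for an NPC to fit through.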

Making gestures matter

An NPC that stands perfectly still while talking feels robotic. Real communication involves body language.

I implemented a gesture system in which the personality file instructs the AI model to output special markers: %smile%, %wave%, %nod_head%, and compound gestures like %nod_head_smile%. The Chat module detects these markers, strips them from the visible text, and sends gesture triggers to the Gestures module. Here is the local model’s console log for one exchange, with a marker opening the reply:

Processing Prompt [BLAS] (417 / 417 tokens)

Generating (24 / 100 tokens)

(EOS token triggered! ID:2)

[13:51:19] CtxLimit:1620/4096, Amt:24/100, Init:0.00s, Process:6.82s (61.18T/s), Generate:6.81s (3.52T/s), Total:13.63s

Output: %smile% Thank you for your compliment! It’s always wonderful to hear positive feedback from our guests.
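Detecting and stripping markers is a small regular-expression job. This Python sketch assumes markers are lowercase letters and underscores wrapped in percent signs, which covers every example in the post. Because a plain "50% off" contains no such pattern, it survives untouched. Compound markers such as %nod_head_smile% come back as a single trigger, and splitting them into separate animations is left to the receiving side.

```python
import re

# Hypothetical marker syntax: %name% with lowercase letters and underscores.
MARKER = re.compile(r"%([a-z_]+)%")

def extract_gestures(text):
    """Return (gesture_names, visible_text) for a model reply."""
    gestures = MARKER.findall(text)
    visible = MARKER.sub("", text)
    # Removing markers can leave double spaces; collapse them and trim the ends.
    visible = re.sub(r"\s{2,}", " ", visible).strip()
    return gestures, visible
```

Applied to the output above, this yields the gesture `["smile"]` and the visible reply “Thank you for your compliment! It’s always wonderful to hear positive feedback from our guests.”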

The configuration philosophy

One principle guided my entire design: non-programmers should be able to customize NPC behavior.

The framework uses configuration files instead of hard-coded values. A general.cfg file contains over 100 parameters—timer settings, AI provider configurations, sensor ranges, pathfinding parameters, and movement speeds. All documented, with sensible defaults.
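The mechanics of a defaults-plus-overrides file are simple to sketch. The Python below assumes a `key = value` line format with `#` comments, and the three parameter names are hypothetical stand-ins, not actual general.cfg keys. Values are converted to the type of their default, so a numeric setting stays numeric.

```python
# Hypothetical parameter names and defaults; not the real general.cfg keys.
DEFAULTS = {
    "sensor_range": 10.0,
    "ai_provider": "kobold",
    "walk_speed": 1.0,
}

def parse_config(text, defaults=DEFAULTS):
    """Parse 'key = value' lines over a copy of the defaults.

    Blank lines and '#' comments are skipped; values whose default is a
    float are converted to float, everything else stays a string.
    """
    config = dict(defaults)
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop trailing comments
        if not line or "=" not in line:
            continue
        key, value = (part.strip() for part in line.split("=", 1))
        if isinstance(defaults.get(key), float):
            value = float(value)
        config[key] = value
    return config
```

Starting from a copy of the defaults means a builder only has to write the lines they want to change. Note that this naive comment handling would also cut a `#` that appears inside a value.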

A personality.cfg file lets you define the NPC’s character. This is essentially a system prompt that shapes how the AI responds. You can create a friendly shopkeeper, a stern gatekeeper, a scholarly librarian, or a cheerful tour guide. The personality file also specifies rules about gesture usage, conversation boundaries, and sensing constraints.
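Because the personality file acts as a system prompt, each request can be assembled from three pieces: the personality text, the latest AROUND-ME context, and what the visitor said. The sketch below assumes the role/content message list used by OpenAI-style chat APIs. It then flattens that list into a single prompt string for completion-style backends such as a local KoboldAI server. The function names, the "User:" turn label, and the extra sensing rule are illustrative assumptions, not the framework's actual template.

```python
def build_messages(personality, around_me, user_name, user_text):
    """Combine the personality prompt, current surroundings, and the visitor's words."""
    system = (
        personality.strip()
        + f"\nYou are talking to {user_name}."
        # Hypothetical sensing constraint of the kind a personality file might carry.
        + "\nOnly describe things listed in AROUND-ME.\n"
        + around_me.strip()
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user_text},
    ]

def to_completion_prompt(messages, npc_name):
    """Flatten chat messages into one prompt string for completion-style backends."""
    lines = []
    for m in messages:
        lines.append(m["content"] if m["role"] == "system" else "User: " + m["content"])
    lines.append(npc_name + ":")  # cue the model to answer as the NPC
    return "\n".join(lines)
```

Keeping the message list as the common format is one way to make a provider switch a configuration change. Only the final conversion step differs between chat-style and completion-style backends.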

A third configuration file, seating.cfg, lets content creators assign priority scores to different furniture. Want NPCs to prefer benches over chairs? Configure it. Want them to avoid bar stools? Add a rule. This lets non-technical builders shape NPC behavior without touching code.
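Seat selection with priorities and occupancy can be sketched in a few lines. This Python version assumes seating rules map name keywords to scores, with any negative score acting as an "avoid" rule that overrides positive matches. It skips occupied seats, which is the part that keeps two NPCs from claiming the same chair, and breaks ties by distance. The rule format and scores are hypothetical, not the real seating.cfg syntax.

```python
def seat_priority(name, priorities, default=0):
    """Score a seat by the keywords in its name.

    Any matching negative score wins (an 'avoid' rule); otherwise the
    highest matching score applies, or default if nothing matches.
    """
    lowered = name.lower()
    scores = [score for keyword, score in priorities.items() if keyword in lowered]
    if not scores:
        return default
    return min(scores) if any(s < 0 for s in scores) else max(scores)

def choose_seat(seats, priorities):
    """seats: list of (name, distance_m, occupied). Returns the chosen name or None."""
    best, best_key = None, None
    for name, distance, occupied in seats:
        if occupied:
            continue  # someone, avatar or NPC, is already sitting here
        score = seat_priority(name, priorities)
        if score < 0:
            continue  # excluded by an avoid rule
        key = (-score, distance)  # higher score first, then the nearer seat
        if best_key is None or key < best_key:
            best, best_key = name, key
    return best
```

For example, with rules `{"bench": 10, "chair": 5, "stool": -1}`, an NPC picks a free bench over a closer chair and never chooses a bar stool.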

Why this matters

Here’s what struck me while building this: OpenSimulator has always positioned itself as the budget alternative to commercial virtual worlds, offering lower cost, more control, and more freedom. But that positioning came with a tradeoff: fewer features, less polish, and less of a sense of life.

Intelligent NPCs change that equation. Suddenly, an OpenSim grid can offer something that commercial platforms struggle with: NPCs built and customized by the community itself, shaped to fit specific use cases, and deeply integrated with regional storytelling and design.

An educational institution could create teaching assistants that actually answer student questions contextually. A roleplay community could populate its world with quest givers that adapt to player choices. A commercial grid could deploy NPCs that provide customer service or guidance.

The technical challenges are real. LSL has a 64KB memory limit per script, so careful optimization is necessary. Scaling multiple NPCs requires load distribution. But the core concept works.

Current state and what’s next

I built this framework to answer a fundamental question: can we create intelligent NPCs at scale in OpenSimulator? The answer appears to be yes, at least for single NPCs and small groups.

The framework is production-ready for single-NPC deployments in various scenarios. I’m currently testing it with multiple NPCs to identify scaling optimizations and measure actual performance under load.

Some features I’m considering for future development:

- Conversation memory – Storing interaction history so NPCs remember previous encounters with specific avatars

- Multi-NPC coordination – Allowing NPCs to be aware of each other and coordinate complex behaviors

- Voice synthesis – Giving NPCs actual spoken voices instead of just text

- Mood modeling – Tracking NPC emotional states that influence responses and behaviors

- Learning from interaction – Using feedback to improve navigation and social responses over time

But the most exciting possibilities come from the community.

What happens when educators deploy NPCs for interactive learning? When artists create installations featuring characters with distinct personalities? When builders integrate them into complex, evolving storylines?

Testing and real-world feedback

I’m actively looking to understand whether there’s genuine interest in this framework within the OpenSim community. The space is admittedly niche — virtual worlds are no longer a mainstream media topic — but within that niche, intelligent NPCs could be genuinely transformative.

I’m particularly interested in connecting with grid owners and educators who might want to test this. Real-world feedback on performance, use cases, and technical challenges would be invaluable.

How do NPCs perform with multiple simultaneous conversations? What happens with dozens of visitors interacting with an NPC at once? Are there specific behaviors or interactions that developers actually want?

This information would help me understand what features matter most and where optimization should focus.

The bigger picture

Building this framework gave me a perspective shift. Virtual worlds are often discussed in terms of their technical capabilities, such as avatar counts, region performance, and rendering fidelity. But what actually makes a world feel alive is the presence of intelligent inhabitants.

Second Life succeeded partly because bots and NPCs added texture to the experience, even when simple. OpenSimulator has never fully capitalized on this potential. The tools have always been there, but the technical barrier has been high.

If that barrier can be lowered, if grid owners can deploy intelligent, contextually-aware NPCs without becoming expert scripters, it opens possibilities for more immersive, responsive virtual spaces.

The question isn’t whether we can build intelligent NPCs technically. We can. The question is whether there’s enough community interest to make it worthwhile to continue developing, optimizing, and extending this particular framework.

I built it because I had to know the answer. Now I’m curious what others think.

The AI-Driven NPC Framework for OpenSimulator is currently in active development, and I’m exploring licensing models and seeking genuine interest from the community and from educators to inform ongoing development priorities. If you’re a grid owner, educator, or developer interested in intelligent NPCs for virtual worlds, contact me at [email protected] about your specific use cases and requirements.